Standard chatbots have a limit. Even powerful AI models struggle when you ask them to do too many different things at once. If you ask a single AI to query a database, perform calculations, and write a summary all in one go, it often makes mistakes or gets confused.

The solution is Multi-Agent Systems.

Instead of relying on one AI to do everything, we create a team of specialized agents. One agent handles the math, another handles the writing, and a “Supervisor” manages them both.

In this guide, we will walk through building a complete end-to-end application, from the Amazon Bedrock backend to a Streamlit frontend, in which a Supervisor Agent manages a team of specialized workers to solve complex user problems.

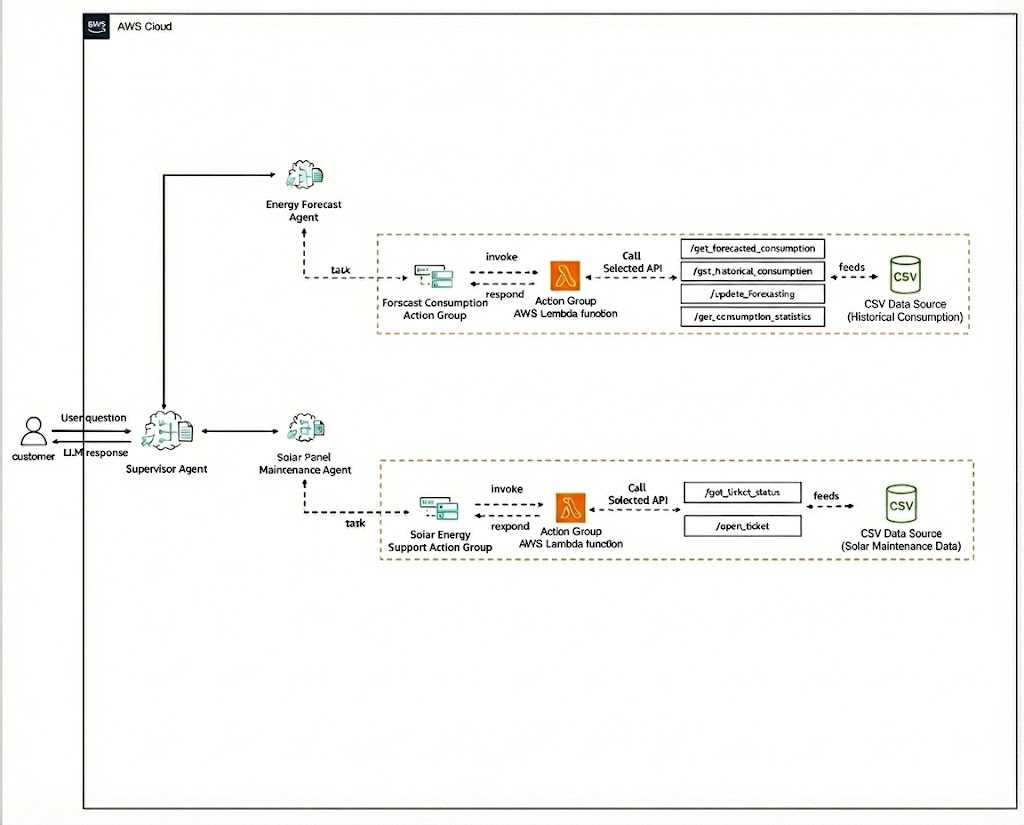

The Architecture: The “Brain” (Backend)

We will use Amazon Bedrock Agents with the new Multi-Agent Collaboration feature. We split the logic into three distinct entities:

1. The Workers (Specialists)

These agents are narrowly focused and equipped with specific tools.

- Agent A: The Forecasting Agent (The “Left Brain”)

  - Role: Handles data, numbers, and logic.

  - Tool: Connected to a Lambda function that queries a DynamoDB database for historical energy usage.

  - Superpower: It uses specific instructions to calculate trends and predict future usage based on hard data.

- Agent B: The Solar Support Agent (The “Right Brain”)

  - Role: Handles technical support and qualitative questions.

  - Tool: Connected to a Lambda function that acts as a Knowledge Base (RAG) containing technical manuals.

  - Superpower: It searches for specific installation or maintenance procedures.
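
As a rough illustration of what the Forecasting Agent's tool might look like, here is a minimal sketch of a Lambda handler for a Bedrock action group defined with function details. The table name `EnergyUsage`, its `customer_id`, `month`, and `kwh` attributes, the `customer_id` parameter, and the naive linear forecast are all assumptions made for this example; they are not part of the setup described above.

```python
import json

TABLE_NAME = "EnergyUsage"  # hypothetical table name


def fetch_usage(customer_id):
    """Read monthly kWh values for one customer from DynamoDB (assumed schema)."""
    import boto3  # imported lazily so the pure helpers below work without AWS
    from boto3.dynamodb.conditions import Key

    table = boto3.resource("dynamodb").Table(TABLE_NAME)
    # Pagination is ignored here for brevity.
    items = table.query(KeyConditionExpression=Key("customer_id").eq(customer_id))["Items"]
    items.sort(key=lambda item: item["month"])  # e.g. "2024-01", "2024-02", ...
    return [float(item["kwh"]) for item in items]


def forecast_next(values):
    """Naive forecast: last value plus the average month-over-month change."""
    if not values:
        return None
    if len(values) == 1:
        return values[0]
    avg_change = (values[-1] - values[0]) / (len(values) - 1)
    return values[-1] + avg_change


def lambda_handler(event, context, fetch=fetch_usage):
    # Bedrock passes function parameters as a list of {name, type, value} dicts.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    history = fetch(params["customer_id"])
    body = {"history_kwh": history, "forecast_kwh": forecast_next(history)}
    # Echo the action group and function name back in the response envelope.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {
                "responseBody": {"TEXT": {"body": json.dumps(body)}}
            },
        },
    }
```

The `fetch` argument exists only so the handler can be exercised locally with canned data; Lambda itself calls the handler with just `event` and `context`.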

2. The Supervisor (The Orchestrator)

This is the most critical component. The Supervisor Agent has no tools of its own. Its sole job is to:

- Analyze the user’s intent.

- Route the work to the correct Worker Agent.

- Synthesize the answers back to the user.

The Backend Code (Python/Boto3)

Here is how we programmatically define this relationship using the AWS SDK (boto3). The magic happens in the associate_agent_collaborator call.

```python
import boto3

bedrock_agent = boto3.client('bedrock-agent')


def create_supervisor_system(forecast_alias_arn, solar_alias_arn, role_arn):
    """Create the Supervisor agent and link both worker agents to it."""
    # 1. Create the Supervisor Agent
    instruction = """
    You are the Supervisor.
    - If the user asks about data, bills, or math, call the ForecastingAgent.
    - If the user asks about maintenance or manuals, call the SolarPanelAgent.
    - If the user asks a complex question involving BOTH, call both and combine the answers.
    """
    resp = bedrock_agent.create_agent(
        agentName="Energy-Supervisor",
        foundationModel="anthropic.claude-3-5-sonnet-20240620-v1:0",
        instruction=instruction,
        agentResourceRoleArn=role_arn,  # IAM role the agent assumes
        agentCollaboration='SUPERVISOR'  # <--- Enables Orchestration
    )
    supervisor_id = resp['agent']['agentId']
    # Note: a new agent starts in CREATING status; the calls below may be
    # rejected until creation has finished.

    # 2. Link the Workers (Collaborators)
    # Link Forecasting Agent
    bedrock_agent.associate_agent_collaborator(
        agentId=supervisor_id,
        agentVersion='DRAFT',
        agentDescriptor={'aliasArn': forecast_alias_arn},
        collaboratorName='ForecastingAgent',
        collaborationInstruction='Use this agent for retrieving historical data and calculating forecasts.',
        relayConversationHistory='TO_COLLABORATOR'  # Shares context
    )

    # Link Solar Agent
    bedrock_agent.associate_agent_collaborator(
        agentId=supervisor_id,
        agentVersion='DRAFT',
        agentDescriptor={'aliasArn': solar_alias_arn},
        collaboratorName='SolarPanelAgent',
        collaborationInstruction='Use this agent for technical manual searches and maintenance guides.',
        relayConversationHistory='TO_COLLABORATOR'
    )

    return supervisor_id
```
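
One step the function above leaves out is preparing the agent: changes to the DRAFT version, including newly linked collaborators, only take effect once `prepare_agent` has run. The following is a minimal sketch of polling `get_agent` and then preparing the Supervisor. The helper names `wait_for_status` and `prepare_supervisor`, the timeout, and the polling interval are assumptions for illustration.

```python
import time


def wait_for_status(client, agent_id, targets, timeout=120, interval=2):
    """Poll get_agent until agentStatus is one of `targets` or the timeout elapses."""
    waited = 0
    while True:
        status = client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if status in targets:
            return status
        if status == "FAILED":
            raise RuntimeError(f"Agent {agent_id} entered FAILED status")
        if waited >= timeout:
            raise TimeoutError(f"Agent {agent_id} still {status} after {timeout}s")
        time.sleep(interval)
        waited += interval


def prepare_supervisor(client, supervisor_id):
    """Prepare the DRAFT version so the linked collaborators take effect."""
    # Make sure creation has finished before asking Bedrock to prepare the agent.
    wait_for_status(client, supervisor_id, {"NOT_PREPARED", "PREPARED"})
    client.prepare_agent(agentId=supervisor_id)
    return wait_for_status(client, supervisor_id, {"PREPARED"})
```

With the client from the backend code, this would be called as `prepare_supervisor(bedrock_agent, supervisor_id)` after both collaborators are linked. The same `wait_for_status` helper could also be used right after `create_agent`, before the first `associate_agent_collaborator` call.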

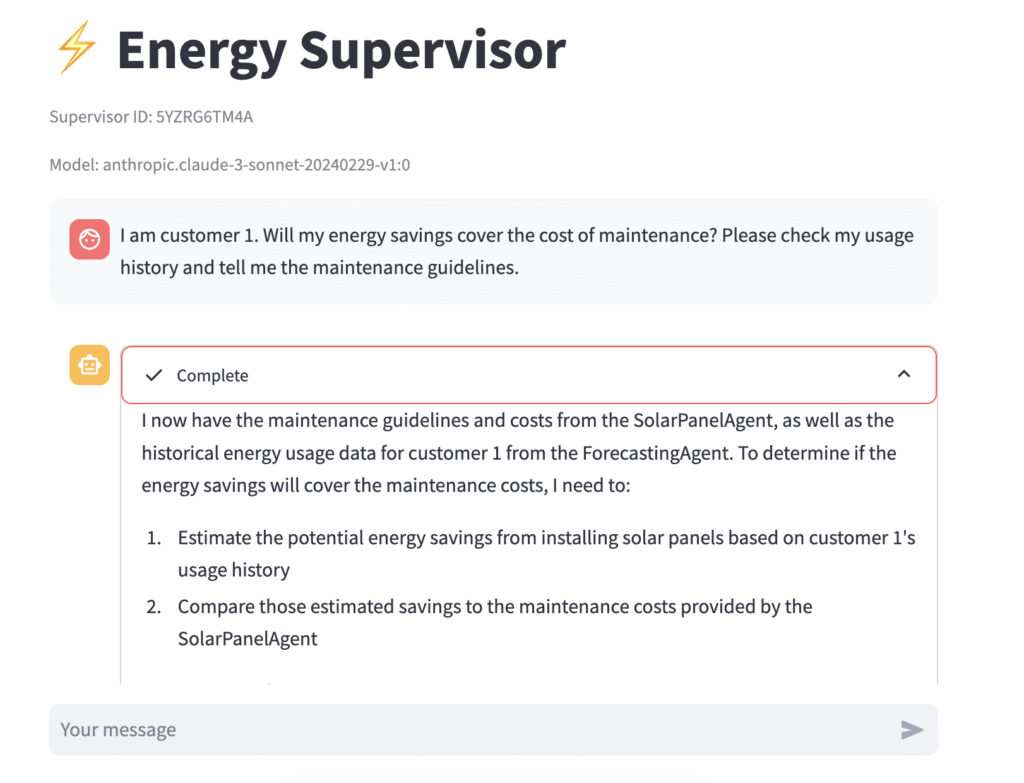

The Visualization: The “Face” (Frontend)

Building the backend is only half the battle. If a user asks a complex question, the system might take 10-20 seconds to “think,” consult Agent A, consult Agent B, and synthesize the result.

If the screen simply freezes, the user will leave.

We use Streamlit to visualize the Chain of Thought. By passing enableTrace=True to Bedrock's invoke_agent call, we receive trace events alongside the answer and can show the user which agent is working on their request in real time.
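
To make the trace format concrete, here is a small sketch of how a delegation can be detected in a single stream event. The function name `collaborator_from_event` is hypothetical, and the sample event is hand-written and heavily trimmed; real events carry more fields.

```python
def collaborator_from_event(event):
    """Return the collaborator name if this stream event is a delegation, else None."""
    # Each trace event wraps the actual trace under a nested "trace" key.
    trace = event.get("trace", {}).get("trace", {})
    invocation = trace.get("orchestrationTrace", {}).get("invocationInput", {})
    collab = invocation.get("agentCollaboratorInvocationInput")
    return collab.get("agentCollaboratorName") if collab else None


# Hand-written, trimmed example of a delegation event.
sample_event = {
    "trace": {
        "sessionId": "demo-session",
        "trace": {
            "orchestrationTrace": {
                "invocationInput": {
                    "invocationType": "AGENT_COLLABORATOR",
                    "agentCollaboratorInvocationInput": {
                        "agentCollaboratorName": "ForecastingAgent",
                        "input": {"text": "Forecast next month's usage."},
                    },
                }
            }
        },
    }
}

print(collaborator_from_event(sample_event))
print(collaborator_from_event({"chunk": {"bytes": b"Hello"}}))
```

Keeping this logic in a plain function means it can be tested with dictionaries like the one above, without calling AWS or running Streamlit.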

The Frontend Code (Streamlit)

Here is how to build the UI that reveals the multi-agent orchestration:

```python
import uuid

import boto3
import streamlit as st

# Configuration
SUPERVISOR_ID = "YOUR_SUPERVISOR_ID"
REGION = "us-east-1"

st.set_page_config(page_title="Agent Team", page_icon="⚡")
st.title("⚡ Energy Workforce Orchestrator")

# Initialize the client and a session ID once per browser session
if "client" not in st.session_state:
    st.session_state.client = boto3.client('bedrock-agent-runtime', region_name=REGION)
if "session_id" not in st.session_state:
    st.session_state.session_id = str(uuid.uuid4())

# Chat Interface
if prompt := st.chat_input("Ask about energy forecasts or maintenance..."):
    st.chat_message("user").write(prompt)

    with st.chat_message("assistant"):
        message_placeholder = st.empty()
        full_response = ""

        # The "Thinking" Container
        with st.status("🤖 Orchestrating Agents...", expanded=True) as status:
            try:
                # Invoke the Supervisor
                response = st.session_state.client.invoke_agent(
                    agentId=SUPERVISOR_ID,
                    agentAliasId="TSTALIASID",  # Uses Draft version
                    sessionId=st.session_state.session_id,
                    inputText=prompt,
                    enableTrace=True  # <--- CRITICAL for visualization
                )

                # Parse the Event Stream
                for event in response['completion']:
                    # 1. Capture the "Thought Process" (Traces)
                    if 'trace' in event:
                        # The trace payload is nested under a second 'trace' key
                        trace = event['trace'].get('trace', {})
                        if 'orchestrationTrace' in trace:
                            orch = trace['orchestrationTrace']
                            # Detect when Supervisor calls a Sub-Agent
                            if 'invocationInput' in orch:
                                if 'agentCollaboratorInvocationInput' in orch['invocationInput']:
                                    collaborator = orch['invocationInput']['agentCollaboratorInvocationInput']['agentCollaboratorName']
                                    st.write(f"🔄 **Supervisor:** Delegating task to **{collaborator}**...")

                    # 2. Capture the Final Answer (Chunks)
                    if 'chunk' in event:
                        chunk = event['chunk']['bytes'].decode('utf-8')
                        full_response += chunk
                        message_placeholder.markdown(full_response + "▌")

                status.update(label="Complete", state="complete", expanded=False)
            except Exception as e:
                st.error(f"Error: {e}")
                status.update(label="Failed", state="error")

        # Redraw the final answer without the typing cursor
        message_placeholder.markdown(full_response)
```

Realizing the Benefits

By combining Amazon Bedrock’s backend orchestration with Streamlit’s frontend visualization, you unlock three key benefits for production Gen AI apps:

- Trust through Transparency: In a “Black Box” LLM, hallucinations are hard to spot. In this system, if the “Forecasting Agent” is called but returns no data, the user sees exactly where the process failed in the Streamlit status box.

- Separation of Concerns: You can update the “Solar Agent’s” knowledge base (e.g., upload new PDF manuals) without touching the “Forecasting Agent” or the Supervisor’s logic. This makes maintenance scalable.

- Latency Masking: The dynamic Streamlit UI keeps the user engaged. Seeing “Supervisor: Delegating task to ForecastingAgent…” provides immediate feedback, making the wait time feel shorter and more productive.
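
The transparency point can be taken one step further by also reporting what each collaborator returned. The sketch below turns a trace event into a short status line. It assumes the collaborator's reply arrives under `observation.agentCollaboratorInvocationOutput` and that errors arrive as a `failureTrace`. The function name `describe_trace_event` is hypothetical, and treating an empty reply as "no data" is a simplification.

```python
def describe_trace_event(event):
    """Turn one trace event into a short status line for the UI, or None."""
    trace = event.get("trace", {}).get("trace", {})

    # A failure trace means the orchestration itself hit an error.
    failure = trace.get("failureTrace")
    if failure:
        return f"Failed: {failure.get('failureReason', 'unknown reason')}"

    # An observation with collaborator output means a worker has replied.
    observation = trace.get("orchestrationTrace", {}).get("observation", {})
    output = observation.get("agentCollaboratorInvocationOutput")
    if output:
        name = output.get("agentCollaboratorName", "collaborator")
        text = output.get("output", {}).get("text", "").strip()
        if not text:
            return f"{name} returned no data"
        return f"{name} answered ({len(text)} characters)"
    return None
```

Inside the event loop, a line such as `if line := describe_trace_event(event): st.write(line)` would surface these messages in the same status box as the delegation notices.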

Conclusion

Multi-Agent systems represent the next evolution of Generative AI. By moving from a single prompt to a coordinated workforce, we can build applications that are more accurate, robust, and capable of handling complex, real-world tasks.

With Amazon Bedrock handling the heavy lifting of orchestration and Streamlit providing the window into the agent’s mind, developers can ship enterprise-grade agentic workflows faster than ever.