Executive Summary

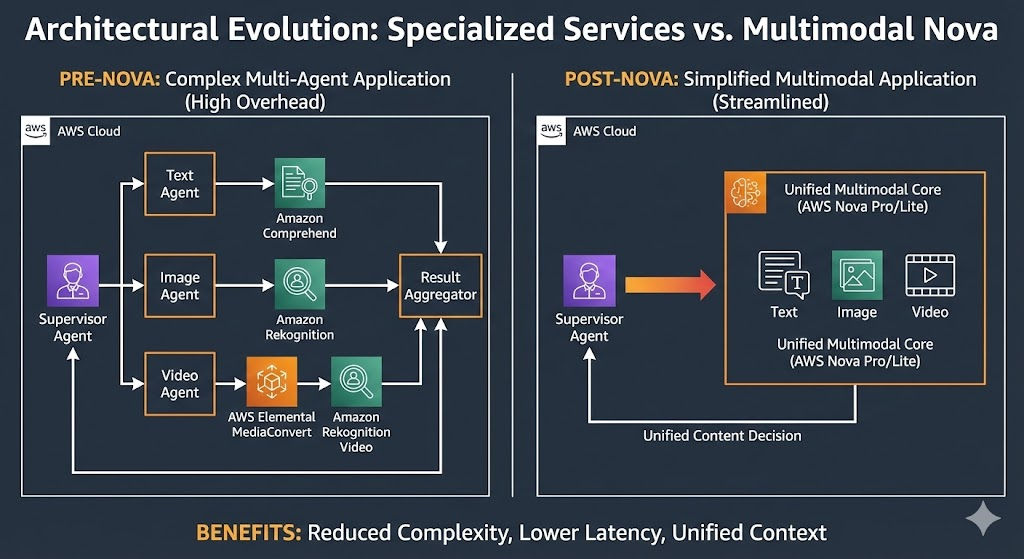

Content moderation is undergoing a fundamental shift, moving from brittle, siloed solutions (like Amazon Rekognition and Comprehend) to unified, intelligent Multimodal Large Language Model (MLLM) Agents. This strategic pivot is necessary to close the “Nuance Gap”—the inability of legacy systems to understand the context, intent, and relationship between text, images, and video. Our architectural blueprint outlines a robust, hybrid approach that balances the power of cloud LLMs (AWS Bedrock) with the cost-effectiveness of local resources (Ollama).

1. The Problem: The Failure of Siloed Moderation

The Business Pain Point

Many platforms still rely on specialized, single-modality AI services, creating a “bag of words” or “bag of pixels” approach in which each modality is judged in isolation. This fragmentation is the root cause of the “Nuance Gap”.

- Siloed Operations: Systems like Amazon Rekognition (image analysis) and Amazon Comprehend (text analysis) operate independently. They lack the ability to communicate and reason about the relationship between a visual element and its accompanying text.

- Costly Errors: This failure leads to:

  - High False Positives: Satirical or historical content is flagged incorrectly because context is missed, wasting budget on manual human review.

  - Dangerous False Negatives: Multimodal toxicity, such as coded slang paired with an innocent image, slips through because neither system connects the dots.

2. The Solution: Multimodal LLMs and Agentic AI

The Paradigm Shift

The solution is a strategic pivot to Multimodal Foundation Models (MFMs), such as the multimodal models in the Amazon Nova family (e.g., Nova Lite and Nova Pro) on Amazon Bedrock. These models natively accept text, image, and video input together in a single request and derive meaning and intent from the combination. One model call can therefore replace separate image-analysis and text-analysis pipelines, radically simplifying the architecture.
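As a rough illustration of the "single request" idea, the sketch below assembles keyword arguments for the `converse` call of boto3's Bedrock Runtime client, placing the post text and its image in the same user message so the model judges them together. The model ID `amazon.nova-lite-v1:0`, the prompt wording, and the `maxTokens` value are assumptions (some regions require a cross-region inference profile ID instead), and `build_moderation_request` is a hypothetical helper. It only builds the request, so it runs without AWS credentials.

```python
from typing import Any


def build_moderation_request(
    text: str,
    image_bytes: bytes,
    image_format: str = "png",
    model_id: str = "amazon.nova-lite-v1:0",  # assumed model ID; check your region
) -> dict[str, Any]:
    """Build kwargs for ONE Bedrock Converse call carrying both text and image."""
    return {
        "modelId": model_id,
        "system": [{"text": "You are an expert content moderation system."}],
        "messages": [
            {
                "role": "user",
                "content": [
                    # Both modalities travel in the same message, unlike the
                    # siloed Rekognition + Comprehend setup.
                    {"text": f"Post text: {text}"},
                    {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                ],
            }
        ],
        # Low temperature for consistent moderation verdicts.
        "inferenceConfig": {"temperature": 0.0, "maxTokens": 512},
    }


if __name__ == "__main__":
    request = build_moderation_request("Look at this!", b"\x89PNG placeholder bytes")
    print([list(block) for block in request["messages"][0]["content"]])
    # Against a real account (requires boto3 and credentials):
    # import boto3
    # response = boto3.client("bedrock-runtime").converse(**request)
    # print(response["output"]["message"]["content"][0]["text"])
```

Raw image bytes are passed as-is; boto3 handles the wire encoding.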

The Agent Framework

To transform an LLM into a reliable enforcement tool, we wrap it in an Agent Framework:

- An Agent is defined as an LLM (Brain) given a specific Role (e.g., “Expert Moderator”) and access to Tools (Capabilities).

- We use the Strands SDK to define our moderation logic as a portable `@tool`. This makes the logic reusable across any larger multi-agent application.
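To make the "Brain + Role + Tools" definition concrete, here is a framework-free sketch in plain Python. It is not the Strands API (Strands provides its own `@tool` decorator and `Agent` class); `make_moderation_tool`, `Agent`, and the stub model are hypothetical names used only to show how a self-contained moderation tool can be plugged into any agent.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], dict]
LLM = Callable[[str, str], str]  # (system_prompt, user_input) -> reply text


def make_moderation_tool(llm: LLM) -> Tool:
    """Wrap an LLM call as a portable moderation capability (hypothetical)."""
    def moderate_content(text: str) -> dict:
        reply = llm("You are an expert content moderation system.", text)
        return {"is_violating": reply.strip().upper() == "VIOLATION", "raw": reply}
    return moderate_content


@dataclass
class Agent:
    role: str                                   # e.g. "Expert Moderator"
    brain: LLM                                  # the reasoning model
    tools: dict[str, Tool] = field(default_factory=dict)

    def run(self, user_input: str) -> dict:
        # The brain picks a tool by name; unknown names are returned as a plain answer.
        prompt = f"You are an {self.role}. Tools: {sorted(self.tools)}"
        choice = self.brain(prompt, user_input).strip()
        if choice in self.tools:
            return self.tools[choice](user_input)
        return {"answer": choice}


if __name__ == "__main__":
    def stub_llm(system: str, text: str) -> str:
        # Deterministic stand-in for a real model, for illustration only.
        if "Tools:" in system:
            return "moderate_content"
        return "VIOLATION" if "bad" in text else "OK"

    moderator = Agent("Expert Moderator", stub_llm,
                      {"moderate_content": make_moderation_tool(stub_llm)})
    print(moderator.run("a bad post"))
```

Because the tool closes over its own model call, the same `moderate_content` function could be registered with a different agent elsewhere without changes.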

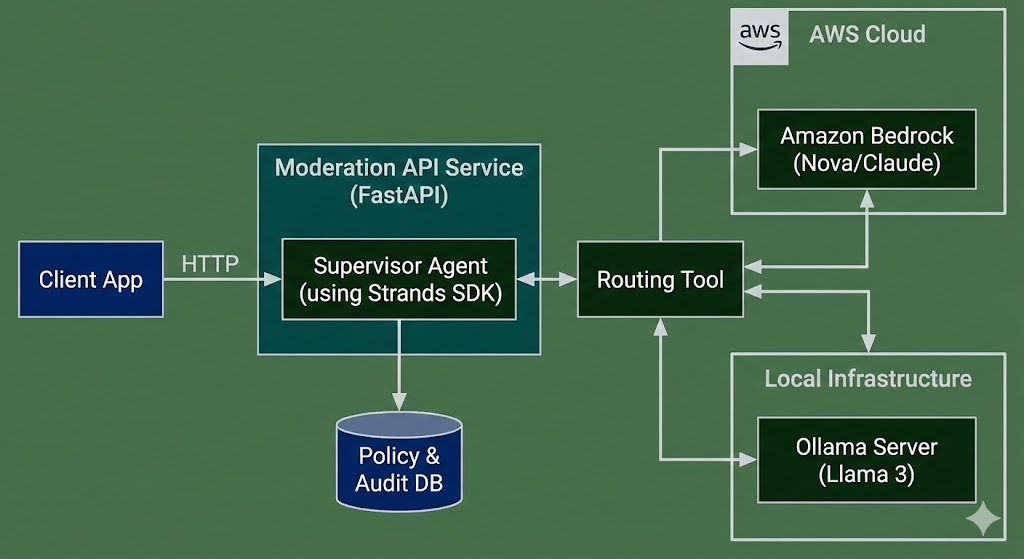

3. Technical Blueprint: The Hybrid Agent Architecture

Our solution utilizes a single, flexible Inference Router at its core, capable of managing both cloud and local resources.

A. The Hybrid Architecture

The system is built as a portable Agent-Tool core, exposed through a FastAPI service.

- Routing Logic: A central function (`call_llm_with_policy`) acts as a smart router, dispatching each request based on cost or preference: either to Amazon Bedrock for high performance, or to a local Ollama server (running a model such as Llama 3) for cost-effective development and testing.

- Reliability (Timeout Fixes): Local inference on consumer hardware can be slow. We prevent premature failures by setting a large, configurable timeout (e.g., 300 seconds) on our HTTP calls, giving heavy batch-processing jobs time to complete.
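A minimal sketch of the router, assuming a two-value policy (`"performance"` routes to Bedrock, `"cost"` routes to Ollama) and injected backend callables; the source names `call_llm_with_policy` but not its signature, so the parameters, the `OLLAMA_URL`/`OLLAMA_TIMEOUT_S` environment variables, and the `llama3` model tag are assumptions. The Ollama call uses its `/api/chat` endpoint with streaming disabled and the large timeout described above.

```python
import json
import os
import urllib.request
from typing import Callable

# Assumed defaults; the source only says the timeout is large and configurable.
OLLAMA_URL = os.environ.get("OLLAMA_URL", "http://localhost:11434/api/chat")
OLLAMA_TIMEOUT_S = float(os.environ.get("OLLAMA_TIMEOUT_S", "300"))


def call_ollama(system: str, user: str, model: str = "llama3") -> str:
    """Send one non-streaming chat request to a local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "system", "content": system},
                     {"role": "user", "content": user}],
        "stream": False,
        "options": {"temperature": 0.0},
    }
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # A generous timeout keeps slow local inference from failing prematurely.
    with urllib.request.urlopen(req, timeout=OLLAMA_TIMEOUT_S) as resp:
        return json.loads(resp.read())["message"]["content"]


def call_llm_with_policy(
    system: str,
    user: str,
    policy: str,
    backends: dict[str, Callable[[str, str], str]],
) -> str:
    """Route by policy: 'performance' -> Bedrock, 'cost' -> local Ollama."""
    routes = {"performance": "bedrock", "cost": "ollama"}
    if policy not in routes:
        raise ValueError(f"unknown routing policy: {policy!r}")
    return backends[routes[policy]](system, user)
```

In use, the backends map might be `{"ollama": call_ollama, "bedrock": bedrock_fn}`, where `bedrock_fn` is a hypothetical wrapper around a Bedrock Converse call. Injecting backends keeps the routing decision testable without either service running.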

B. Engineering for Determinism

For moderation, consistency is paramount. We engineer the LLM’s behavior to be deterministic:

- Prompt as Software: The System Prompt provides the model with its strict persona (“expert content moderation system”) and the exact policy rules (the knowledge base).

- Parameter Control: The single most critical parameter is Temperature, which is set to `0.0`. This removes sampling randomness: the model effectively decodes greedily, choosing the most probable token at each step, which yields consistent, policy-compliant judgments across repeated runs. (Hosted and GPU inference can still show minor run-to-run variation, so a temperature of `0.0` greatly reduces variation rather than strictly guaranteeing determinism.)

- Schema Enforcement: The prompt dictates a specific JSON output schema (e.g., `{"is_violating": true/false, ...}`). Combined with parsing logic that detects JSON lists (batch output) and summarizes them into a single dictionary, this ensures the API always maintains a clean, parsable contract.
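The prompt-as-software and schema-enforcement ideas can be sketched as follows. The policy rules, the `category`/`reason` fields, and the summary keys (`violation_count`, `item_count`, `items`) are illustrative assumptions; the source specifies only the persona, the `is_violating` field, and that list output is collapsed into one dictionary.

```python
import json
from typing import Any

# Hypothetical policy rules; substitute your own knowledge base.
POLICY_RULES = [
    "No hate speech or slurs, including coded slang.",
    "No threats or incitement to violence.",
    "Satire and historical documentation are allowed when clearly framed.",
]

SCHEMA_HINT = '{"is_violating": true/false, "category": string or null, "reason": string}'


def build_system_prompt(rules: list[str]) -> str:
    """Persona + numbered policy rules + the exact JSON contract."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You are an expert content moderation system.\n"
        f"Policy rules:\n{numbered}\n"
        f"Respond ONLY with JSON matching: {SCHEMA_HINT}"
    )


def parse_verdict(raw: str) -> dict[str, Any]:
    """Parse model output and always return a single dict.

    A batch request may come back as a JSON list of verdicts; it is
    summarized so the API contract (one dictionary) never changes.
    """
    data = json.loads(raw)
    if isinstance(data, list):
        flagged = [item for item in data if item.get("is_violating")]
        return {
            "is_violating": bool(flagged),
            "violation_count": len(flagged),
            "item_count": len(data),
            "items": data,
        }
    if isinstance(data, dict) and "is_violating" in data:
        return data
    raise ValueError("model output does not match the expected schema")
```

Summarizing with "any item violates" is one reasonable policy for batches; a stricter service might instead reject list output outright.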

4. Scaling the Solution

The modular architecture is designed for future scaling:

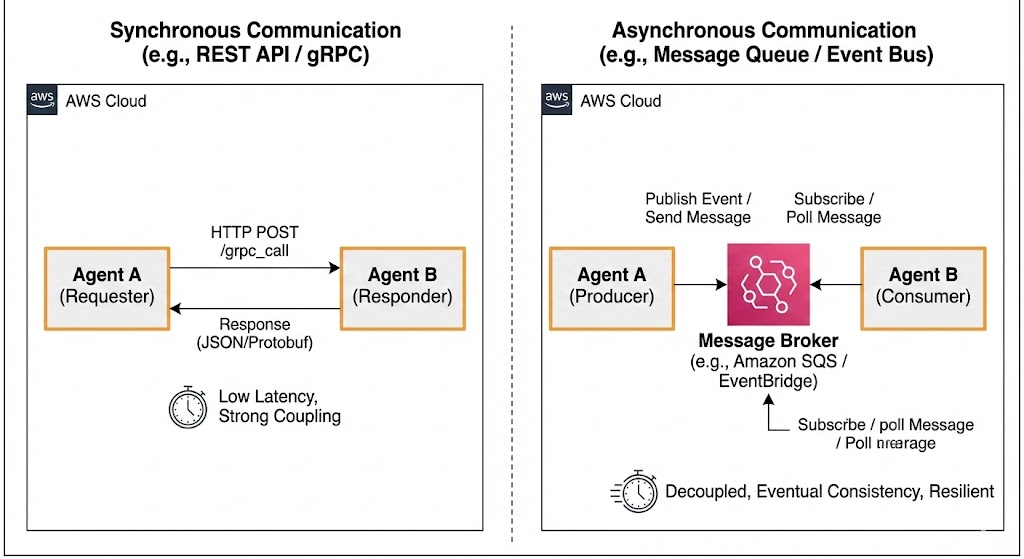

- Multi-Agent Communication: The system can evolve into a multi-agent workflow:

  - Synchronous (REST/gRPC): Used for real-time, blocking checks (e.g., ensuring a comment is safe before publishing).

  - Asynchronous (SQS/EventBridge): Used for long-running, non-blocking tasks (e.g., processing large video files) where latency is acceptable.

- Extensibility: Rather than wiring together a web of specialized services, future tasks (e.g., video analysis) will leverage the unified nature of Amazon Nova models, which can handle all modalities within a single agent.
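The synchronous/asynchronous split can be sketched as a small dispatcher. An in-process `queue.Queue` stands in for SQS or EventBridge here, and the routing rule (video, or payloads over an assumed 5 MiB cutoff, go async) plus the `Submission` and `dispatch` names are hypothetical choices for illustration.

```python
import queue
from dataclasses import dataclass
from typing import Callable

# In-process stand-in for an SQS queue or EventBridge bus; a real system
# would publish through the AWS SDK instead.
ASYNC_JOBS: "queue.Queue[dict]" = queue.Queue()

ASYNC_MEDIA_TYPES = {"video"}       # assumed: long-running modalities go async
MAX_SYNC_BYTES = 5 * 1024 * 1024    # assumed size cutoff for blocking checks


@dataclass
class Submission:
    content_id: str
    media_type: str   # "text", "image", or "video"
    size_bytes: int


def dispatch(sub: Submission, moderate_now: Callable[[Submission], dict]) -> dict:
    """Blocking check for small items; enqueue heavy ones for later processing."""
    if sub.media_type in ASYNC_MEDIA_TYPES or sub.size_bytes > MAX_SYNC_BYTES:
        ASYNC_JOBS.put({"content_id": sub.content_id, "media_type": sub.media_type})
        return {"status": "queued", "content_id": sub.content_id}
    # Synchronous path: the caller waits, e.g. before a comment is published.
    return {"status": "done", "verdict": moderate_now(sub)}
```

A separate worker would drain the queue and call the same moderation tool, so both paths share one policy implementation.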