Modular Micro-Service (Factory-Service-Agent Pattern)

Primary Goal: To provide a strict, production-ready foundation for deterministic AI agents that output structured JSON data rather than conversational text.

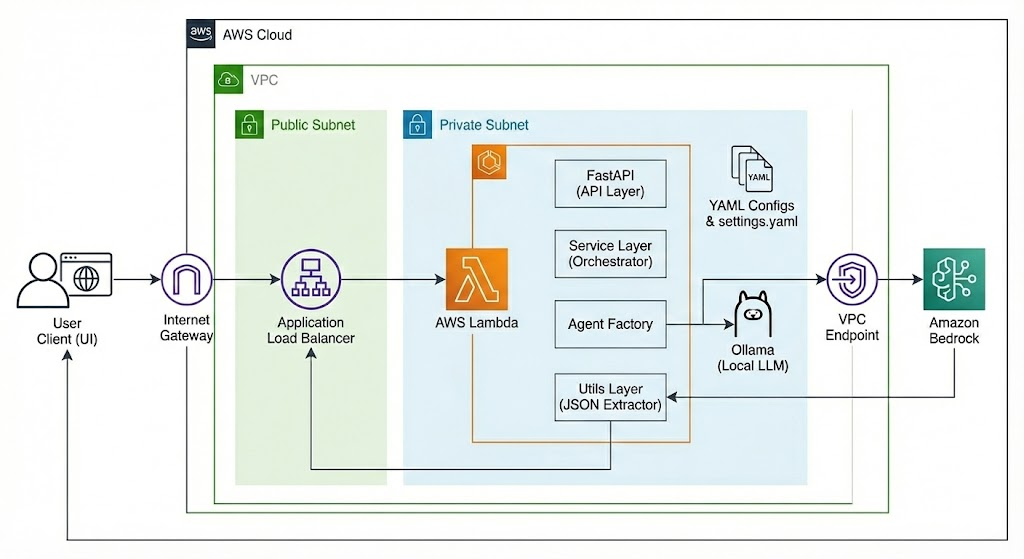

1. System Architecture Overview

The Sentinel Framework is built on the “Strands” philosophy: AI agents should be treated as software components with strict interfaces (Input -> Processing -> JSON Output), not chatbots.

High-Level Data Flow

- Client (UI): Sends a request (Text + Metadata) to the FastAPI backend.

- API Layer: Routes the request to the appropriate Service.

- Service Layer: Orchestrates the logic. It calls the Factory to spin up an Agent.

- Factory: Reads the YAML Configuration, injects system prompts, and attaches the LLM Provider (Bedrock or Ollama).

- Agent (LLM): Processes the input against the strict System Prompt.

- Utils Layer: Intercepts the raw LLM output, extracts the JSON payload (using Regex if necessary), and handles errors.

- Response: Clean JSON is returned to the UI.
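The flow above can be sketched end to end in plain Python. This is a minimal sketch, not the framework's code. The names fake_llm, factory, utils_extract, service and api_route are hypothetical stand-ins for the real layers. The prompt is hard-coded instead of read from YAML, and no FastAPI server is involved.

```python
import json
import re
from typing import Any, Callable

# Hypothetical signature for an LLM call: (system_prompt, user_text) -> raw reply.
LLMCall = Callable[[str, str], str]


def fake_llm(system_prompt: str, user_text: str) -> str:
    # Hypothetical stub: simulates a model that "chatters" around its JSON payload.
    return 'Here is my analysis:\n{"is_violating": false, "reasoning": "benign"}\nDone.'


def factory(agent_name: str, llm: LLMCall) -> Callable[[str], str]:
    # Factory: binds a system prompt (normally read from YAML) to a provider.
    system_prompt = f"You are the {agent_name} agent. Reply in strict JSON."
    return lambda text: llm(system_prompt, text)


def utils_extract(raw: str) -> dict[str, Any]:
    # Utils layer: pull the JSON object out of the raw model output.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        return {"error": "no JSON object found"}
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError as exc:
        return {"error": f"invalid JSON: {exc.msg}"}


def service(text: str, metadata: dict[str, Any], llm: LLMCall = fake_llm) -> dict[str, Any]:
    # Service layer: orchestrates factory -> agent -> utils.
    agent = factory(metadata.get("agent", "moderator"), llm)
    return utils_extract(agent(text))


def api_route(request: dict[str, Any]) -> str:
    # API layer: hands the request to the service and returns clean JSON text.
    result = service(request["text"], request.get("metadata", {}))
    return json.dumps(result)
```

Calling api_route({"text": "hello", "metadata": {"agent": "moderator"}}) returns only the JSON object. The surrounding chatter from the stub model is discarded by the utils layer.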

2. Core Framework Components

These files form the “engine” of the application and rarely need modification.

2.1 The Abstraction Layer (src/lib/strands.py)

This is a lightweight wrapper that provides a unified interface across LLM providers, reducing vendor lock-in.

- Model (Base Class): Defines the standard chat() method.
- BedrockModel: Implements chat() using boto3 for AWS Bedrock.
- OllamaModel: Implements chat() using standard HTTP requests for local inference.
- Agent: The execution unit. It combines a Model instance with a specific system_prompt.
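A minimal sketch of what such a wrapper could look like is below. Several details are assumptions, not the contents of src/lib/strands.py:

- the chat(system_prompt, user_message) signature
- Agent.run()
- the EchoModel test double
- the default host and region

OllamaModel calls Ollama's POST /api/chat endpoint with streaming disabled. BedrockModel uses the Converse API of boto3's bedrock-runtime client. boto3 is imported lazily, so local-only setups do not need it installed.

```python
import json
import urllib.request
from abc import ABC, abstractmethod
from dataclasses import dataclass


class Model(ABC):
    """Base class: every provider exposes the same chat() method."""

    @abstractmethod
    def chat(self, system_prompt: str, user_message: str) -> str:
        """Return the model's raw text reply."""


class OllamaModel(Model):
    """Local inference over Ollama's HTTP API."""

    def __init__(self, model_id: str, host: str = "http://localhost:11434") -> None:
        self.model_id = model_id
        self.host = host  # assumed default Ollama address

    def chat(self, system_prompt: str, user_message: str) -> str:
        payload = {
            "model": self.model_id,
            "stream": False,  # one JSON response instead of a token stream
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_message},
            ],
        }
        req = urllib.request.Request(  # passing data= makes this a POST
            f"{self.host}/api/chat",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req) as resp:
            body = json.loads(resp.read().decode("utf-8"))
        return body["message"]["content"]


class BedrockModel(Model):
    """AWS Bedrock via the bedrock-runtime Converse API."""

    def __init__(self, model_id: str, region: str = "us-east-1") -> None:
        import boto3  # lazy import: only needed when the cloud provider is used

        self.model_id = model_id
        self.client = boto3.client("bedrock-runtime", region_name=region)

    def chat(self, system_prompt: str, user_message: str) -> str:
        response = self.client.converse(
            modelId=self.model_id,
            system=[{"text": system_prompt}],
            messages=[{"role": "user", "content": [{"text": user_message}]}],
        )
        return response["output"]["message"]["content"][0]["text"]


class EchoModel(Model):
    """Hypothetical offline test double: always returns a canned reply."""

    def __init__(self, reply: str) -> None:
        self.reply = reply

    def chat(self, system_prompt: str, user_message: str) -> str:
        return self.reply


@dataclass
class Agent:
    """Execution unit: one Model instance bound to one system prompt."""

    model: Model
    system_prompt: str

    def run(self, user_message: str) -> str:
        return self.model.chat(self.system_prompt, user_message)
```

Because Agent depends only on the abstract Model, switching from local to cloud inference means constructing a different Model subclass. The agent code itself does not change.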

2.2 The Agent Factory (src/core/factory.py)

The Factory Pattern is used to decouple Agent Definition (YAML) from Agent Instantiation (Python).

- Function: create_agent(agent_name)
- Logic:
  - Locates the YAML config file for the requested agent.
  - Reads the role and system_prompt.
  - Determines the active Provider (AWS vs Local) from the global config.
  - Returns a fully initialized Agent object ready to run.
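The factory logic can be sketched as follows. This sketch makes two assumptions: each agent lives in <agent_name>.yaml using the agents > <name> > role/system_prompt layout shown in Section 3, and PyYAML is installed for create_agent(). The pure build_agent() helper, the PROVIDER_CONFIG dict and the flattened Agent dataclass are hypothetical. A real factory would instantiate BedrockModel or OllamaModel at the marked point rather than storing the provider name.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Any

# Hypothetical global provider config (keys and defaults are assumptions).
PROVIDER_CONFIG: dict[str, str] = {"provider": "ollama", "model_id": "phi3"}


@dataclass
class Agent:
    name: str
    role: str
    system_prompt: str
    provider: str
    model_id: str


def build_agent(config: dict[str, Any], agent_name: str) -> Agent:
    """Turn a parsed YAML document into an Agent (pure, so easy to test)."""
    try:
        spec = config["agents"][agent_name]
    except KeyError:
        raise ValueError(f"agent {agent_name!r} is not defined in the config") from None
    # A real factory would attach a BedrockModel/OllamaModel instance here.
    return Agent(
        name=agent_name,
        role=spec["role"],
        system_prompt=spec["system_prompt"],
        provider=PROVIDER_CONFIG["provider"],
        model_id=PROVIDER_CONFIG["model_id"],
    )


def create_agent(agent_name: str, config_dir: str = "src/config/agents") -> Agent:
    """Locate <agent_name>.yaml, parse it, and build the Agent."""
    import yaml  # PyYAML, imported lazily

    path = Path(config_dir) / f"{agent_name}.yaml"
    with path.open(encoding="utf-8") as fh:
        config = yaml.safe_load(fh)
    return build_agent(config, agent_name)
```

Splitting file I/O from construction keeps the YAML layout as the single contract between prompt engineers and Python code. build_agent() can then be tested without touching the filesystem.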

2.3 Robust Utilities (src/core/utils.py)

This file ensures reliability, specifically dealing with the non-deterministic nature of LLMs.

- extract_json(text): The most critical function. It uses Regex (r"(\{.*\})") to hunt for a JSON object within the LLM’s response. This allows the model to “think” or “chatter” before/after the JSON without breaking the app.
- set_provider_config(): Manages global state for switching between Cloud and Local inference at runtime.
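A minimal sketch of both utilities follows. Two details are assumptions: extract_json() returns None on failure, and provider state is a module-level dict keyed by "bedrock"/"ollama". Note the re.DOTALL flag. Without it, "." does not match newlines, so the pattern would miss pretty-printed, multi-line JSON.

```python
import json
import re
from typing import Any, Optional

# Greedy match from the first "{" to the last "}"; DOTALL lets "." cross newlines.
_JSON_BLOCK = re.compile(r"(\{.*\})", re.DOTALL)

# Hypothetical global provider state (keys and defaults are assumptions).
_PROVIDER_CONFIG: dict[str, str] = {"provider": "ollama", "model_id": "phi3"}
_ALLOWED_PROVIDERS = {"bedrock", "ollama"}


def extract_json(text: str) -> Optional[dict[str, Any]]:
    """Return the JSON object embedded in an LLM reply, or None if absent/invalid."""
    match = _JSON_BLOCK.search(text)
    if match is None:
        return None
    try:
        return json.loads(match.group(1))
    except json.JSONDecodeError:
        return None


def set_provider_config(provider: str, model_id: Optional[str] = None) -> dict[str, str]:
    """Switch between cloud (Bedrock) and local (Ollama) inference at runtime."""
    if provider not in _ALLOWED_PROVIDERS:
        raise ValueError(f"unknown provider {provider!r}")
    _PROVIDER_CONFIG["provider"] = provider
    if model_id is not None:
        _PROVIDER_CONFIG["model_id"] = model_id
    return dict(_PROVIDER_CONFIG)  # return a copy so callers can't mutate state
```

Because the pattern is greedy, the match runs from the first "{" to the last "}". If chatter after the payload also contains a closing brace, the captured text will not parse and the function returns None. A more tolerant alternative is to scan with json.JSONDecoder().raw_decode() starting at each "{" position.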

3. Agent Configuration (YAML)

Agents are defined purely in YAML files located in src/config/agents/. This separates Prompt Engineering from Software Engineering.

Example Structure (moderator.yaml):

```yaml
agents:
  moderator:
    role: "Content Safety Moderator" # The high-level persona
    # Strict instructions go here.
    system_prompt: |
      You are a moderator. Analyze input against the policy.

      OUTPUT FORMAT (Strict JSON):
      {
        "is_violating": boolean,
        "reasoning": "string"
      }
```

Key Feature: The prompt explicitly specifies the JSON schema the agent must return. The extract_json() function in utils.py only locates the JSON object, so downstream code relies on the agent adhering to this schema.
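Finding a JSON object is not the same as finding the right one. A thin schema check after extraction can catch a model that drifts from the prompt. The validate_output() helper and MODERATOR_SCHEMA below are hypothetical and are not part of utils.py. Libraries such as jsonschema or Pydantic do the same job more thoroughly.

```python
from typing import Any

# Expected keys and Python types, mirroring the OUTPUT FORMAT in moderator.yaml.
MODERATOR_SCHEMA: dict[str, type] = {"is_violating": bool, "reasoning": str}


def validate_output(payload: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the payload conforms."""
    problems = []
    for key, expected in schema.items():
        if key not in payload:
            problems.append(f"missing key: {key}")
        elif not isinstance(payload[key], expected):
            problems.append(
                f"{key}: expected {expected.__name__}, got {type(payload[key]).__name__}"
            )
    return problems
```

Returning a list rather than raising lets the service decide whether to retry the agent, return an error to the UI, or log and continue.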

4. The Swarm: Supervisor Pattern

The “Swarm” capability is achieved through a Router-Service Architecture.

4.1 The Router Agent (router.yaml)

- Role: Traffic Controller.

- Task: It does not analyze content in depth; it only classifies intent.

- Output: {"route": "SENTIMENT" | "MODERATION"}.
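The router's reply is just another LLM output, so it is worth normalizing before dispatch. This minimal sketch assumes the router emits the single-key object shown above. The parse_route() helper and VALID_ROUTES are hypothetical. Using MODERATION as the fallback is also an assumption, chosen as the more conservative path when the decision cannot be read.

```python
import json
import re

VALID_ROUTES = {"SENTIMENT", "MODERATION"}


def parse_route(raw_reply: str, default: str = "MODERATION") -> str:
    """Extract {"route": ...} from the router reply, falling back to a safe default."""
    match = re.search(r"\{.*\}", raw_reply, re.DOTALL)
    if match is None:
        return default
    try:
        decision = json.loads(match.group(0))
    except json.JSONDecodeError:
        return default
    # Normalize case and whitespace so "sentiment " still matches.
    route = str(decision.get("route", "")).strip().upper()
    return route if route in VALID_ROUTES else default
```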

4.2 The Orchestrator (src/agents/swarm_service.py)

This service implements the “Supervisor” logic in Python code:

- Step 1: Calls the Router Agent with the raw user input.
- Step 2: Parses the JSON decision (route).
- Step 3: Dynamically dispatches the input to either run_sentiment_analysis() or run_moderation_check().
- Step 4: Injects metadata (e.g., _meta_router_reason) into the final response so the UI knows why a specific agent was chosen.
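The four steps map onto a short supervisor function. In this sketch:

- The two service functions are placeholders with canned results.
- The router is injected as call_router.
- The router is assumed to return an optional "reason" field alongside "route"; Section 4.1 documents only "route".
- json.loads() stands in for extract_json() to keep the example self-contained.

It uses a dispatch table rather than an if/elif chain, so adding a route means adding one dictionary entry.

```python
import json
from typing import Any, Callable

Handler = Callable[[str], dict[str, Any]]


def run_sentiment_analysis(text: str) -> dict[str, Any]:
    # Placeholder: the real service calls the sentiment agent.
    return {"sentiment": "positive"}


def run_moderation_check(text: str) -> dict[str, Any]:
    # Placeholder: the real service calls the moderator agent.
    return {"is_violating": False, "reasoning": "no policy match"}


HANDLERS: dict[str, Handler] = {
    "SENTIMENT": run_sentiment_analysis,
    "MODERATION": run_moderation_check,
}


def run_swarm_router(text: str, call_router: Callable[[str], str]) -> dict[str, Any]:
    """Supervisor: ask the router, dispatch to a specialist, annotate the result."""
    # Step 1: call the Router Agent with the raw user input.
    raw = call_router(text)
    # Step 2: parse the JSON decision (the framework would use extract_json here).
    decision = json.loads(raw)
    route = decision.get("route", "MODERATION")
    # Step 3: dispatch; unknown routes fall back to the moderation check.
    handler = HANDLERS.get(route, run_moderation_check)
    result = handler(text)
    # Step 4: inject router metadata so the UI can explain the choice.
    result["_meta_router_reason"] = decision.get("reason", "")
    return result
```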

5. The Code Generator (app_generator_swarm.sh)

This script is a DevOps Scaffolding Tool. It is a self-contained Bash script that automates the entire setup process.

How it works:

- Cleanup: Removes old project directories to ensure a clean build.

- Tree Generation: Creates the standard folder structure (src/api, src/core, templates, etc.).

- File Injection: Uses “Heredocs” (cat <<EOF > filename) to write the Python code, YAML configs, and HTML templates directly into the new files.

- Configuration: Pre-populates settings.yaml with safe defaults (Phi-3, Local Provider).
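For readers who want the same steps without Bash, here is a Python equivalent of the script's flow. It is not the script itself. The DIRECTORIES and FILES contents, the placement of settings.yaml at the project root, and its provider/model_id keys are all hypothetical. shutil.rmtree() deletes the target directory, so point it only at a throwaway path.

```python
import shutil
from pathlib import Path

# Directories named in this document; the real script creates more ("etc.").
DIRECTORIES = ["src/api", "src/core", "templates"]

# Hypothetical file contents standing in for the script's heredocs.
FILES = {
    "settings.yaml": "provider: ollama\nmodel_id: phi3\n",
    "src/core/__init__.py": "",
}


def scaffold(root: str) -> list[str]:
    """Rebuild a project tree from scratch and return the files written."""
    base = Path(root)
    # Cleanup: remove any previous build so every run starts identical.
    if base.exists():
        shutil.rmtree(base)
    # Tree generation: create the folder structure.
    for rel in DIRECTORIES:
        (base / rel).mkdir(parents=True, exist_ok=True)
    # File injection: write each file's full contents (the heredoc step).
    written = []
    for rel, content in FILES.items():
        path = base / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content, encoding="utf-8")
        written.append(rel)
    return sorted(written)
```

Running scaffold() twice on the same path yields the same tree, which is the reproducibility property the script is meant to provide.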

Why use it?

It guarantees that every developer starts with the exact same directory structure, dependencies, and file contents, eliminating “it works on my machine” issues related to missing files.

6. Extending the Framework (How-To)

To add a new capability (e.g., a “Summary Agent”), follow this workflow:

- Define (src/config/agents/summary.yaml): Create a YAML file defining the summary_agent with a system prompt that enforces a JSON output (e.g., {"summary": "...", "keywords": [...]}).

- Implement (src/agents/summary_service.py): Write a Python function run_summary() that:
  - Initializes the factory with your YAML.
  - Creates the agent.
  - Calls the agent and runs extract_json() on the result.

- Expose (src/api/main.py): Add a new route @app.post("/api/summary") that calls your service function.

- Update Swarm (src/agents/swarm_service.py):
  - Update the Router’s system prompt to include the new “SUMMARY” category.
  - Update the run_swarm_router Python logic to handle the new if route == "SUMMARY": case.
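The Implement and Update Swarm steps can be sketched together. SUMMARY_PROMPT, the injected call_agent callable and route_summary() are hypothetical. extract_json() here is a minimal stand-in for the utils.py version. The FastAPI route is left as a comment, with a hypothetical SummaryRequest model, so the file runs without FastAPI installed.

```python
import json
import re
from typing import Any, Callable

AgentCall = Callable[[str, str], str]  # (system_prompt, user_text) -> raw reply

# Hypothetical prompt; in the framework this lives in summary.yaml.
SUMMARY_PROMPT = (
    "You summarize text. OUTPUT FORMAT (Strict JSON): "
    '{"summary": "string", "keywords": ["string"]}'
)


def extract_json(text: str) -> dict[str, Any]:
    # Minimal stand-in for utils.extract_json(): first "{" to last "}".
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        return {"error": "no JSON object found"}
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return {"error": "invalid JSON"}


def run_summary(text: str, call_agent: AgentCall) -> dict[str, Any]:
    """Service function: run the summary agent and return clean JSON."""
    raw = call_agent(SUMMARY_PROMPT, text)
    return extract_json(raw)


def route_summary(route: str, text: str, call_agent: AgentCall) -> dict[str, Any]:
    # Hypothetical fragment of run_swarm_router: the new SUMMARY branch.
    if route == "SUMMARY":
        return run_summary(text, call_agent)
    return {"error": f"route {route!r} is not handled in this sketch"}


# Exposing it over HTTP (requires FastAPI; commented out so this file runs standalone):
# @app.post("/api/summary")
# def summary_endpoint(req: SummaryRequest):
#     return run_summary(req.text, call_agent)
```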

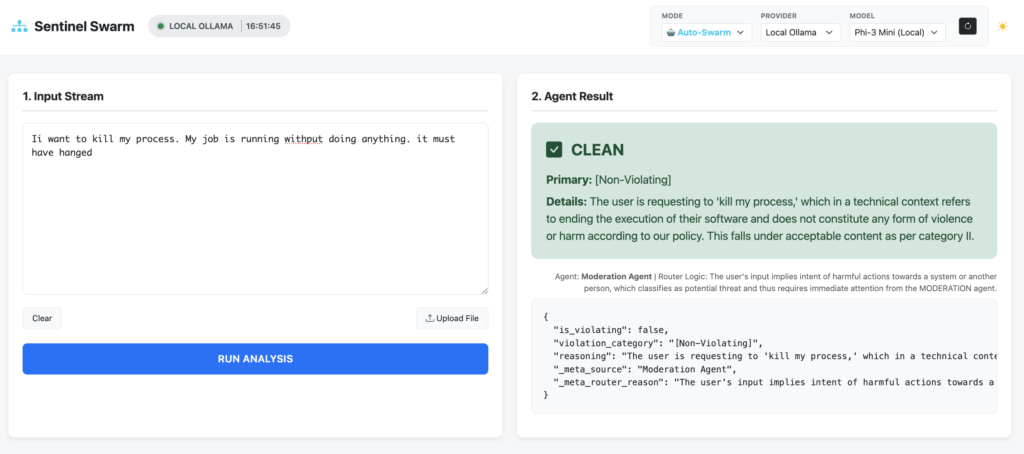

Screenshots