UPDATE – 13/04/2024 – There is a work around which involved updating JSON file using linux tools such as – sed and awk. The file contains array of JSON objects. The issue is that Apache Spark read the whole contents instead of processing each JSON object.

Problem

Get dataset and unzip the dataset i.e. after unzip https://leidata-preview.gleif.org/storage/golden-copy-files/2024/03/13/905647/20240313-0800-gleif-goldencopy-lei2-golden-copy.json.zip# (832M) file size increase to 11GB

- not enough memory available in Google Colab to process this dataset

- json file contains array of json objects and each record is spread across many lines (multilines json object).

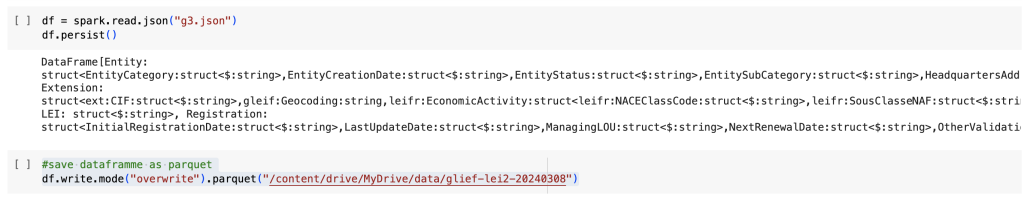

Workaround

- Use sed to replace first and last line (i.e. remove array notation)

- awk to insert newline after end of each json object

- awk to remove leading and trailing white spaces (optional step). It reduces the file size to 6.1 GB

Time taken

It took about 30 mins to read and save data as parquet. Not an ideal solution but does the job.

%%bash

#update first line

sed -i '1 s/^.*$//' /content/20240308-1600-gleif-goldencopy-lei2-golden-copy.json

#update last line

sed -i '$ s/^.*$/}/' /content/20240308-1600-gleif-goldencopy-lei2-golden-copy.json

#insert newline if ^},

awk '{printf "%s",$0} /^},/{printf "\n";}' /content/20240308-1600-gleif-goldencopy-lei2-golden-copy.json > g.json

#remove leading and trailing whitespaces (Optional - reduce the file size to 6.8 GB)

awk '{$1=$1}1' g.json > g3.json

UPDATE – 24/01/2023 – GLIEF golden copy is a very complex json file and needs more driver memory. Using wholeTextFiles may not work – increase driver memory and use spark.read.json(filename,multiLine=True)

- if needed use free storage from Google – need google.colab package for accessing Google Drive

- Get Glief data including sample files from https://www.gleif.org/en/lei-data/gleif-golden-copy/download-the-golden-copy#/

!git clone https://gitlab.com/akkasali910/companieshouse-data

!pip3 install google.colab

- you need to install pyspark in Google Colab

- pip3 install pyspark

- use code below to crerate spark context

from pyspark.sql import SparkSession

from pyspark import SparkContext

from pyspark.sql import functions as F

from pyspark.sql import types as T

from datetime import datetime

import logging

spark = SparkSession.builder\

.config("spark.sql.warehouse.dir","./spark-warehouse") \

.appName('pyspark-cdc')\

.enableHiveSupport()\

.getOrCreate()

- remove metadata

listFiles = dbutils.fs.ls("/databricks/driver/gleif")

dbutils.fm.fm("/databricks/driver/gleif", recurse=True)

Glief file is too large

- reading it using spark.read.json with multiLine=True may not work

- workaround – use WholeTextFiles

from pyspark import StorageLevel

multiline_rdd=sc.wholeTextFiles('dbfs:/FileStore/shared_uploads/info@mirpurutc.com/lei_sample.json')

multiline_rdd.persist(StorageLevel.MEMORY_AND_DISK)

import re

json_rdd = multiline_rdd.map(lambda x : x[1]).map(lambda x : re.sub(r"\s+", "", x,flags=re.UNICODE))

df = spark.read.json(json_rdd)

Processing GLIEF Reporting Exceptions file

# By default, spark considers every record in a JSON file as a fully qualified record in a single line.

#glief_file = 'file:///tmp/20230120-0800-gleif-goldencopy-lei2-golden-copy.json'

glief_file = 'file:///tmp/20230124-0800-gleif-goldencopy-repex-golden-copy.json'

from pyspark import StorageLevel

df = spark.read.json(glief_file,multiLine=True)

df.persist(StorageLevel.MEMORY_AND_DISK)

df.printSchema()

Ref: https://www.gleif.org/en/lei-data/gleif-data-quality-management

Sample golden copy record

{"records":[

{

"LEI": {

"$": "529900P5TAD0ABFTMV10"

},

"Entity": {

"LegalName": {

"@xml:lang": "de",

"$": "Land Hessen"

},

"LegalAddress": {

"@xml:lang": "de",

"FirstAddressLine": {

"$": "Friedrich-Ebert-Allee 8"

},

"City": {

"$": "Wiesbaden"

},

"Region": {

"$": "DE-HE"

},

"Country": {

"$": "DE"

},

"PostalCode": {

"$": "65185"

}

},

"HeadquartersAddress": {

"@xml:lang": "de",

"FirstAddressLine": {

"$": "Friedrich-Ebert-Allee 8"

},

"City": {

"$": "Wiesbaden"

},

"Region": {

"$": "DE-HE"

},

"Country": {

"$": "DE"

},

"PostalCode": {

"$": "65185"

}

},

"RegistrationAuthority": {

"RegistrationAuthorityID": {

"$": "RA999999"

}

},

"LegalJurisdiction": {

"$": "DE"

},

"EntityCategory": {

"$": "RESIDENT_GOVERNMENT_ENTITY"

},

"EntitySubCategory": {

"$": "STATE_GOVERNMENT"

},

"LegalForm": {

"EntityLegalFormCode": {

"$": "8888"

},

"OtherLegalForm": {

"$": "Legal Entity of Public Law"

}

},

"EntityStatus": {

"$": "ACTIVE"

},

"EntityCreationDate": {

"$": "1945-09-19T00:00:00+02:00"

}

},

"Registration": {

"InitialRegistrationDate": {

"$": "2017-07-14T16:41:21+02:00"

},

"LastUpdateDate": {

"$": "2021-05-25T17:07:10.059+02:00"

},

"RegistrationStatus": {

"$": "ISSUED"

},

"NextRenewalDate": {

"$": "2021-07-14T16:41:21+02:00"

},

"ManagingLOU": {

"$": "5299000J2N45DDNE4Y28"

},

"ValidationSources": {

"$": "ENTITY_SUPPLIED_ONLY"

},

"ValidationAuthority": {

"ValidationAuthorityID": {

"$": "RA999999"

}

}

},

"Extension": null

},

{

"LEI": {

"$": "5493007BPZS3LU7J0U61"

},

"Entity": {

"LegalName": {

"@xml:lang": "en",

"$": "Canadian Air Transport Security Authority"

},

"OtherEntityNames": {

"OtherEntityName": [

{

"@xml:lang": "fr",

"@type": "ALTERNATIVE_LANGUAGE_LEGAL_NAME",

"$": "Administration canadienne de la sûreté du transport aérien"

}

]

},

"LegalAddress": {

"@xml:lang": "en",

"FirstAddressLine": {

"$": "Sun Life Financial Centre"

},

"AdditionalAddressLine": [

{

"$": "99 Bank Street"

},

{

"$": "5th Floor"

}

],

"City": {

"$": "Ottawa"

},

"Region": {

"$": "CA-ON"

},

"Country": {

"$": "CA"

},

"PostalCode": {

"$": "K1P 6B9"

}

},

"HeadquartersAddress": {

"@xml:lang": "en",

"FirstAddressLine": {

"$": "Sun Life Financial Centre"

},

"AdditionalAddressLine": [

{

"$": "99 Bank Street"

},

{

"$": "5th Floor"

}

],

"City": {

"$": "Ottawa"

},

"Region": {

"$": "CA-ON"

},

"Country": {

"$": "CA"

},

"PostalCode": {

"$": "K1P 6B9"

}

},

"RegistrationAuthority": {

"RegistrationAuthorityID": {

"$": "RA999999"

}

},

"LegalJurisdiction": {

"$": "CA"

},

"EntityCategory": {

"$": "RESIDENT_GOVERNMENT_ENTITY"

},

"EntitySubCategory": {

"$": "CENTRAL_GOVERNMENT"

},

"LegalForm": {

"EntityLegalFormCode": {

"$": "8888"

},

"OtherLegalForm": {

"$": "Crown Corporation"

}

},

"EntityStatus": {

"$": "ACTIVE"

},

"EntityCreationDate": {

"$": "2002-04-01T00:00:00-04:00"

}

},

"Registration": {

"InitialRegistrationDate": {

"$": "2016-11-01T02:40:00+01:00"

},

"LastUpdateDate": {

"$": "2021-05-25T17:17:17.512+02:00"

},

"RegistrationStatus": {

"$": "ISSUED"

},

"NextRenewalDate": {

"$": "2021-09-01T18:25:00+02:00"

},

"ManagingLOU": {

"$": "EVK05KS7XY1DEII3R011"

},

"ValidationSources": {

"$": "ENTITY_SUPPLIED_ONLY"

},

"ValidationAuthority": {

"ValidationAuthorityID": {

"$": "RA999999"

}

}

},

"Extension": null

}]}Error messages

Reading large JSON file with an array of JSON objects may result in OOM (Java Out of Memory exception). If it does occur then the Java Garbage Collector (GC) will not be able free up memory spaces and you need to restart the Java session again.

ERROR:root:Exception while sending command.

Traceback (most recent call last):

File "/usr/local/lib/python3.10/dist-packages/py4j/clientserver.py", line 516, in send_command

raise Py4JNetworkError("Answer from Java side is empty")

py4j.protocol.Py4JNetworkError: Answer from Java side is empty

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.10/dist-packages/py4j/java_gateway.py", line 1038, in send_command

response = connection.send_command(command)

File "/usr/local/lib/python3.10/dist-packages/py4j/clientserver.py", line 539, in send_command

raise Py4JNetworkError(

py4j.protocol.Py4JNetworkError: Error while sending or receivingSpark Context used to process JSON file on Google CoLab

from pyspark.sql import SparkSession

spark = (SparkSession.builder

.appName('etl')

.config("spark.executor.memory", "8G")

.config("spark.driver.memory", "4G")

.config("spark.serizlizer",

"org.apache.spark.serializer.KryoSerializer")

.getOrCreate()

)Example of Schema

root

|-- Entity: struct (nullable = true)

| |-- EntityCategory: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- EntityCreationDate: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- EntityStatus: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- EntitySubCategory: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- HeadquartersAddress: struct (nullable = true)

| | |-- @xml:lang: string (nullable = true)

| | |-- AdditionalAddressLine: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- $: string (nullable = true)

| | |-- AddressNumber: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- AddressNumberWithinBuilding: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- City: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- Country: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- FirstAddressLine: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- MailRouting: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- PostalCode: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- Region: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- LegalAddress: struct (nullable = true)

| | |-- @xml:lang: string (nullable = true)

| | |-- AdditionalAddressLine: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- $: string (nullable = true)

| | |-- AddressNumber: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- AddressNumberWithinBuilding: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- City: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- Country: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- FirstAddressLine: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- MailRouting: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- PostalCode: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- Region: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- LegalEntityEvents: struct (nullable = true)

| | |-- LegalEntityEvent: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- @event_status: string (nullable = true)

| | | | |-- @group_id: string (nullable = true)

| | | | |-- @group_sequence_no: string (nullable = true)

| | | | |-- @group_type: string (nullable = true)

| | | | |-- AffectedFields: struct (nullable = true)

| | | | | |-- AffectedField: array (nullable = true)

| | | | | | |-- element: struct (containsNull = true)

| | | | | | | |-- $: string (nullable = true)

| | | | | | | |-- @field_xpath: string (nullable = true)

| | | | |-- LegalEntityEventEffectiveDate: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- LegalEntityEventRecordedDate: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- LegalEntityEventType: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- ValidationDocuments: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- ValidationReference: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| |-- LegalForm: struct (nullable = true)

| | |-- EntityLegalFormCode: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- OtherLegalForm: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- LegalJurisdiction: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- LegalName: struct (nullable = true)

| | |-- $: string (nullable = true)

| | |-- @xml:lang: string (nullable = true)

| |-- OtherAddresses: struct (nullable = true)

| | |-- OtherAddress: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- @type: string (nullable = true)

| | | | |-- @xml:lang: string (nullable = true)

| | | | |-- AdditionalAddressLine: array (nullable = true)

| | | | | |-- element: struct (containsNull = true)

| | | | | | |-- $: string (nullable = true)

| | | | |-- AddressNumber: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- AddressNumberWithinBuilding: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- City: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- Country: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- FirstAddressLine: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- MailRouting: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- PostalCode: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- Region: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| |-- OtherEntityNames: struct (nullable = true)

| | |-- OtherEntityName: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- $: string (nullable = true)

| | | | |-- @type: string (nullable = true)

| | | | |-- @xml:lang: string (nullable = true)

| |-- RegistrationAuthority: struct (nullable = true)

| | |-- OtherRegistrationAuthorityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- RegistrationAuthorityEntityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- RegistrationAuthorityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- SuccessorEntity: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- SuccessorEntityName: struct (nullable = true)

| | | | |-- $: string (nullable = true)

| | | | |-- @xml:lang: string (nullable = true)

| | | |-- SuccessorLEI: struct (nullable = true)

| | | | |-- $: string (nullable = true)

| |-- TransliteratedOtherAddresses: struct (nullable = true)

| | |-- TransliteratedOtherAddress: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- @type: string (nullable = true)

| | | | |-- @xml:lang: string (nullable = true)

| | | | |-- AdditionalAddressLine: array (nullable = true)

| | | | | |-- element: struct (containsNull = true)

| | | | | | |-- $: string (nullable = true)

| | | | |-- AddressNumber: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- AddressNumberWithinBuilding: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- City: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- Country: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- FirstAddressLine: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- MailRouting: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- PostalCode: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- Region: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| |-- TransliteratedOtherEntityNames: struct (nullable = true)

| | |-- TransliteratedOtherEntityName: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- $: string (nullable = true)

| | | | |-- @type: string (nullable = true)

| | | | |-- @xml:lang: string (nullable = true)

|-- Extension: struct (nullable = true)

| |-- ext:CIF: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- gleif:Geocoding: string (nullable = true)

| |-- leifr:EconomicActivity: struct (nullable = true)

| | |-- leifr:NACEClassCode: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- leifr:SousClasseNAF: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- leifr:FundManagerBusinessRegisterID: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- leifr:FundNumber: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- leifr:LegalFormCodification: struct (nullable = true)

| | |-- $: string (nullable = true)

| | |-- @uri: string (nullable = true)

| |-- leifr:SIREN: struct (nullable = true)

| | |-- $: string (nullable = true)

|-- LEI: struct (nullable = true)

| |-- $: string (nullable = true)

|-- Registration: struct (nullable = true)

| |-- InitialRegistrationDate: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- LastUpdateDate: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- ManagingLOU: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- NextRenewalDate: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- OtherValidationAuthorities: struct (nullable = true)

| | |-- OtherValidationAuthority: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- OtherValidationAuthorityID: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- ValidationAuthorityEntityID: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| | | | |-- ValidationAuthorityID: struct (nullable = true)

| | | | | |-- $: string (nullable = true)

| |-- RegistrationStatus: struct (nullable = true)

| | |-- $: string (nullable = true)

| |-- ValidationAuthority: struct (nullable = true)

| | |-- OtherValidationAuthorityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- ValidationAuthorityEntityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| | |-- ValidationAuthorityID: struct (nullable = true)

| | | |-- $: string (nullable = true)

| |-- ValidationSources: struct (nullable = true)

| | |-- $: string (nullable = true)